When I was first remaking my personal site (the one you're on right now), I decided I wanted to solve one of the major annoyances I've had with my about page.

For some context, the about page is statically generated from my GitHub profile README.md for the sake of my laziness. I use little GitHub profile badges that kind of look like this:

To do this, I use a getStaticProps function to fetch my GitHub profile README.md and serialize it using next-mdx-remote:

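```typescript
import { serialize } from "next-mdx-remote/serialize";
import rehypeRaw from "rehype-raw";
// `env` comes from the site's own environment setup (import not shown).

export async function getStaticProps() {
  // The profile README lives in the repo named after the user: <user>/<user>.
  const repo = `${env.NEXT_PUBLIC_GH_USER}/${env.NEXT_PUBLIC_GH_USER}`;
  const res = await fetch(
    `https://raw.githubusercontent.com/${repo}/master/README.md`,
  );
  const source = await res.text();
  // Parse as plain markdown and let rehype-raw handle the inline HTML.
  const mdxSource = await serialize(source, {
    mdxOptions: { format: "md", rehypePlugins: [rehypeRaw] },
  });
  return { props: { mdxSource } };
}
```

Then, using MDXRemote and @tailwindcss/typography, I can render the markdown content with support for dark mode: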

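```tsx
import type { InferGetStaticPropsType } from "next";
import { MDXRemote } from "next-mdx-remote";

function About({ mdxSource }: InferGetStaticPropsType<typeof getStaticProps>) {
  // `prose` styles the rendered markdown; `dark:prose-invert` covers dark mode.
  return (
    <div
      suppressHydrationWarning
      className="prose dark:prose-invert prose-img:m-0 prose-img:inline"
    >
      <MDXRemote {...mdxSource} />
    </div>
  );
}
```

But the badge icon images take forever to load! What do I do?!?!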

This happens because the images are served directly from their source, which presumably has no CDN in front of it to serve cache hits. When you view a README.md on GitHub, the images instead go through Camo, GitHub's image reverse proxy, and its caching layer is what makes them so snappy.

So the solution is to implement image caching. But how?

Ah right, silly me. I'm using Next.js, which means I should be able to take advantage of the next/image package for image optimization/caching, right?

Well, when I first started working on this site, I had the brilliant idea of hosting it on GitHub Pages so that all my individual repository-specific pages would be routed by GitHub automagically. GitHub Pages only serves static files, so unfortunately I can't take advantage of Vercel's image caching with next/image.

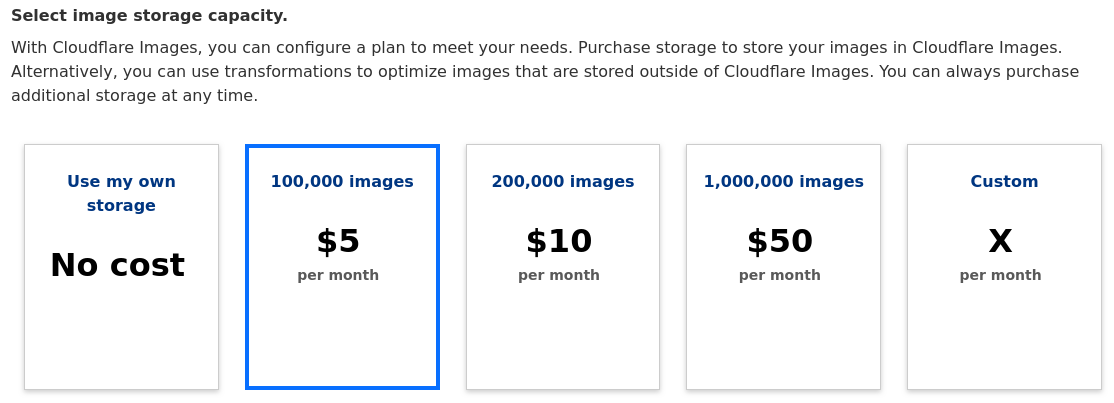

Cloudflare?

Well, the fantastic thing about the next/image package is that it supports a variety of custom "loaders" that allow different CDNs to be used for image caching.
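To make the loader idea concrete, here is a minimal sketch of what a Cloudflare loader could look like. It is not what this site ended up using. It assumes Cloudflare's `/cdn-cgi/image/<options>/<source>` URL format, and the zone `https://example.com`, the function name, and the default quality of 75 are placeholders picked for illustration.

```typescript
// Shape of the argument next/image passes to a custom loader.
interface LoaderArgs {
  src: string;
  width: number;
  quality?: number;
}

// Hypothetical zone: swap in a domain that is proxied through Cloudflare.
const CLOUDFLARE_ZONE = "https://example.com";

// Builds a Cloudflare image URL of the form /cdn-cgi/image/<options>/<source>.
function cloudflareLoader({ src, width, quality }: LoaderArgs): string {
  const options = [
    `width=${width}`,
    `quality=${quality ?? 75}`, // assumed default when no quality is given
    "format=auto",
  ];
  return `${CLOUDFLARE_ZONE}/cdn-cgi/image/${options.join(",")}/${src}`;
}

console.log(
  cloudflareLoader({ src: "https://img.shields.io/badge/a-b-blue", width: 640 }),
);
```

A function like this would be handed to the component through its loader prop, as in `<Image loader={cloudflareLoader} ... />`, so every image URL gets rewritten to go through the CDN.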

Well I've been wanting to switch over to using Cloudflare nameservers for a long while. So I might as well make the switch and get a CDN along with it, right?

So I logged into my dashboard, went over to Namecheap, pointed my nameservers to Cloudflare, and...

Piggybacking Off GitHub's Own CDN

I'm not broke but I really don't wanna pay $5 a month for my silly little website. Remember how GitHub has its own image caching layer for its profile badges? Well, why not just piggyback off of that?

So let's add some more functionality to getStaticProps:

```typescript
import he from "he";
import { serialize } from "next-mdx-remote/serialize";
import rehypeRaw from "rehype-raw";

export async function getStaticProps() {
  const repo = `${env.NEXT_PUBLIC_GH_USER}/${env.NEXT_PUBLIC_GH_USER}`;
  const res = await fetch(
    `https://raw.githubusercontent.com/${repo}/master/README.md`,
  );
  let source = await res.text();

  // Group 1 captures an <img> src, group 2 a markdown image URL.
  for (const match of source.matchAll(
    /(?:<img.*?src=['"](.+?)['"])|(?:!\[.*?\]\((.+?)\))/gi,
  )) {
    let unescapedUrl = match.at(1) ?? match.at(2);
    if (unescapedUrl == null) {
      continue;
    }
    unescapedUrl = unescapedUrl.trim();
    // Swap the URL for its entity-decoded form (e.g. "&amp;" becomes "&").
    source = source.replaceAll(unescapedUrl, he.decode(unescapedUrl));
  }

  const mdxSource = await serialize(source, {
    mdxOptions: { format: "md", rehypePlugins: [rehypeRaw] },
  });
  return { props: { mdxSource } };
}
```

What we're doing here is simply looking for all the <img /> tags and markdown image blocks within our source. We then use he to decode all the HTML entities in each URL and replace the original URL with the decoded one. This step might seem insignificant, but it will be important later.
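To see what that loop does to a single README, here is a small self-contained sketch that runs the same regex over a made-up two-line sample. The sample badges are invented, and `decodeBasicEntities` is a hypothetical stand-in for he.decode that only knows a handful of entities, so the snippet runs without installing anything.

```typescript
// Same pattern as in getStaticProps: group 1 is an <img> src, group 2 a markdown image URL.
const IMAGE_URL = /(?:<img.*?src=['"](.+?)['"])|(?:!\[.*?\]\((.+?)\))/gi;

// Hypothetical stand-in for he.decode; the real library handles every HTML entity.
function decodeBasicEntities(text: string): string {
  return text
    .replace(/&quot;/g, '"')
    .replace(/&lt;/g, "<")
    .replace(/&gt;/g, ">")
    .replace(/&amp;/g, "&"); // last, so "&amp;lt;" ends up as "&lt;" and not "<"
}

// Collects every image URL found in the markdown source.
function extractImageUrls(source: string): string[] {
  const urls: string[] = [];
  IMAGE_URL.lastIndex = 0; // the regex is global, so reset it before each scan
  let match: RegExpExecArray | null;
  while ((match = IMAGE_URL.exec(source)) !== null) {
    const url = match[1] ?? match[2];
    if (url != null) {
      urls.push(url.trim());
    }
  }
  return urls;
}

// Made-up README content with one HTML image and one markdown image.
const sample = [
  '<img src="https://img.shields.io/badge/a-b-blue?style=flat&amp;logo=github" alt="a" />',
  "![c](https://img.shields.io/badge/c-d-green)",
].join("\n");

const found = extractImageUrls(sample);
const decoded = found.map(decodeBasicEntities);
console.log(decoded);
```

The first URL comes back with a plain `&` in place of `&amp;`, while the second one has no entities and is left untouched.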

Now we can fetch the HTML page of the rendered markdown on GitHub, extract the Camo CDN URLs from its image tags, and replace the original URLs with them.

```typescript
import he from "he";
import { serialize } from "next-mdx-remote/serialize";
import rehypeRaw from "rehype-raw";

export async function getStaticProps() {
  const repo = `${env.NEXT_PUBLIC_GH_USER}/${env.NEXT_PUBLIC_GH_USER}`;
  const res = await fetch(
    `https://raw.githubusercontent.com/${repo}/master/README.md`,
  );
  let source = await res.text();

  // Step 1: decode HTML entities in every image URL of the raw README.
  for (const match of source.matchAll(
    /(?:<img.*?src=['"](.+?)['"])|(?:!\[.*?\]\((.+?)\))/gi,
  )) {
    let unescapedUrl = match.at(1) ?? match.at(2);
    if (unescapedUrl == null) {
      continue;
    }
    unescapedUrl = unescapedUrl.trim();
    source = source.replaceAll(unescapedUrl, he.decode(unescapedUrl));
  }

  // Step 2: fetch the rendered profile page, where every image has a Camo URL.
  const profileRes = await fetch(`https://github.com/${repo}`);
  const profile = await profileRes.text();

  // Group 1 is the Camo URL (src), group 2 the original URL (data-canonical-src).
  for (const match of profile.matchAll(
    /src="(.*?)".*?data-canonical-src="(.*?)"/g,
  )) {
    if (match[1] == null || match[2] == null) {
      continue;
    }
    const camoUrl = he.decode(match[1].trim());
    const realUrl = he.decode(match[2].trim());
    // Point the README at GitHub's cached copy instead of the origin.
    source = source.replaceAll(realUrl, camoUrl);
  }

  const mdxSource = await serialize(source, {
    mdxOptions: { format: "md", rehypePlugins: [rehypeRaw] },
  });
  return { props: { mdxSource } };
}
```

The regex here simply matches the src and data-canonical-src attributes of the <img /> tags on the GitHub profile page. We can do this because GitHub will expand all image blocks to include both the original URL and the Camo CDN URL.

Notice that he.decode is necessary here, because GitHub may encode characters in the original image URL with HTML entities. This is the reason we had to use he.decode on the URLs in the original markdown source: any URL that potentially contains HTML entities can then be correctly matched to the URL that appears here.
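Here is a rough, self-contained sketch of that matching step on a single badge. The HTML snippet and its Camo path are made up, `swapInCamoUrls` is a name chosen for this example, and `decodeAmp` is a hypothetical stand-in for he.decode that only handles `&amp;`.

```typescript
// Same pattern as in getStaticProps: group 1 is the Camo src, group 2 the original URL.
const CAMO_PAIR = /src="(.*?)".*?data-canonical-src="(.*?)"/g;

// Hypothetical stand-in for he.decode that only handles "&amp;".
function decodeAmp(text: string): string {
  return text.replace(/&amp;/g, "&");
}

// Replaces every original image URL in `source` with its Camo counterpart.
function swapInCamoUrls(source: string, profileHtml: string): string {
  let result = source;
  CAMO_PAIR.lastIndex = 0; // the regex is global, so reset it before each scan
  let match: RegExpExecArray | null;
  while ((match = CAMO_PAIR.exec(profileHtml)) !== null) {
    const camoUrl = decodeAmp(match[1].trim());
    const realUrl = decodeAmp(match[2].trim());
    // Without decoding, "&amp;" here would never match the "&" in the README.
    result = result.split(realUrl).join(camoUrl);
  }
  return result;
}

// Made-up rendered HTML; real Camo URLs are much longer.
const sampleHtml =
  '<img src="https://camo.githubusercontent.com/0123abcd/68747470733a2f2f" ' +
  'alt="badge" data-canonical-src="https://img.shields.io/badge/a-b-blue?style=flat&amp;logo=github" />';

// README source after its own URLs have been entity-decoded.
const readme =
  "![badge](https://img.shields.io/badge/a-b-blue?style=flat&logo=github)";

console.log(swapInCamoUrls(readme, sampleHtml));
```

Because both sides are decoded before comparing, the `&amp;` in GitHub's HTML lines up with the `&` in the README and the badge URL gets swapped for the Camo one.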